
23. July 2025

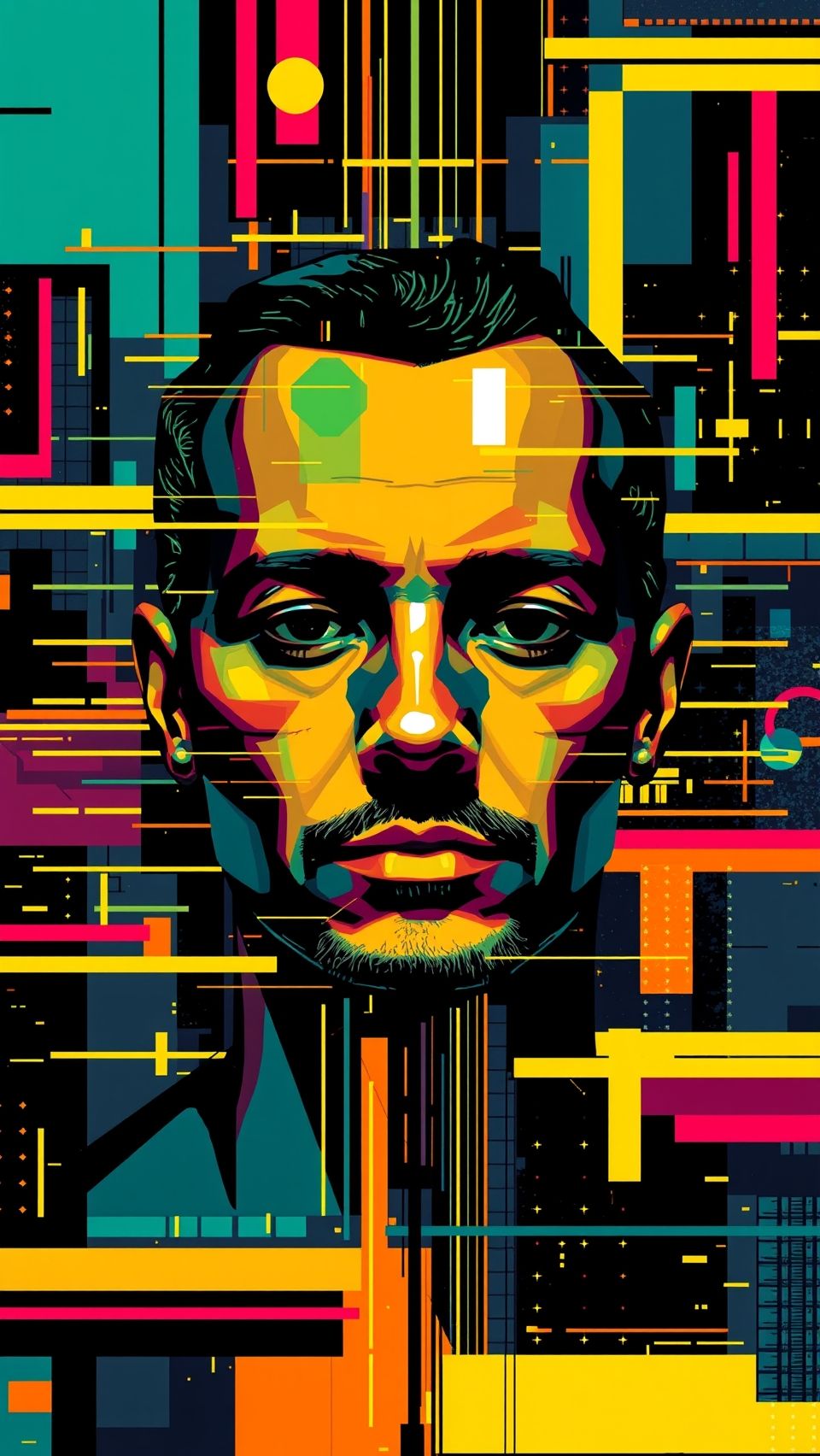

The Dark Side of Facial Recognition Technology: A Cautionary Tale of Wrongful Arrests and Police Accountability

On August 15, 2024, Robert Dillon was taken into custody by officers from the Jacksonville Sheriff’s Office and the Jacksonville Beach Police Department. The arrest was made possible by AI software used by the two departments, which claimed that Dillon was a “93 percent match” for surveillance footage of the actual suspect.

However, as Action News Jax (ANJax), a local news outlet, investigated further, it became clear that the arrest was a gross miscarriage of justice. According to a police report reviewed by ANJax, AI software matched Dillon's photo against similar images, and that match led to his identification as the suspect. Witnesses who were shown the footage also mistook Dillon for the actual suspect.

In body-cam video obtained by ANJax, Dillon and his wife can both be heard expressing outrage and confusion as they are confronted with the accusation. "This is f**king nuts. God this is nuts," Dillon can be heard saying, adding that he had not left Fort Myers, the seat of Lee County, in two years.

The incident has raised serious questions about the reliability of AI-powered facial recognition technology and its potential to lead to wrongful arrests. Such technology has become increasingly common in law enforcement agencies across the country, which often rely on it to identify suspects from vast amounts of surveillance footage and social media data.

While proponents argue that AI can be a valuable tool for law enforcement, critics counter that it is inherently flawed and prone to error. The systems used by police departments are notoriously unreliable, with studies showing that they can produce false matches as often as 1 in 100 times.
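To see why an error rate of that size matters, the Python sketch that follows works through the arithmetic of a single search against a photo database. It is a hypothetical illustration, not a model of any system used in Dillon's case: the gallery sizes are invented, the 1-in-100 figure is treated as a per-comparison false match rate, and every comparison is assumed to be independent.

```python
# Hypothetical illustration: how a per-comparison false match rate scales
# when one probe image is searched against a large photo gallery.
# Assumptions: every comparison is independent and shares the same rate.
# This is a simplification, not a description of any real police system.


def expected_false_matches(false_match_rate: float, gallery_size: int) -> float:
    """Expected number of wrong people flagged by a single search."""
    return false_match_rate * gallery_size


def prob_at_least_one_false_match(false_match_rate: float, gallery_size: int) -> float:
    """Chance that a single search flags at least one wrong person."""
    return 1.0 - (1.0 - false_match_rate) ** gallery_size


if __name__ == "__main__":
    rate = 1 / 100  # the "1 in 100" figure, assumed here to be per comparison
    for size in (100, 1_000, 100_000):  # hypothetical gallery sizes
        print(
            f"gallery={size:>7}: "
            f"expected false matches={expected_false_matches(rate, size):,.0f}, "
            f"P(at least one)={prob_at_least_one_false_match(rate, size):.4f}"
        )
```

Under these assumptions, a search against a gallery of a thousand or more photos is all but certain to flag at least one wrong person, which is why critics argue a "match" can serve only as a lead that must be checked independently.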

Moreover, the use of AI-powered facial recognition raises serious concerns about accountability and due process. In Dillon's case, the two police departments involved repeatedly shifted the blame onto each other, refusing to take responsibility for their actions.

Experts argue that the use of AI-powered facial recognition raises significant constitutional concerns. "Police are not allowed under the Constitution to arrest somebody without probable cause," said Nate Freed-Wessler, deputy director of the ACLU's Speech, Privacy, and Technology Project. "And this technology expressly cannot provide probable cause, it is so glitchy, it's so unreliable. At best, it has to be viewed as an extremely unreliable lead because it often, often gets it wrong."

The incident highlights the need for greater transparency and accountability in law enforcement agencies that rely on AI technology. While it is essential to explore the potential benefits of AI-powered tools, it is equally crucial to ensure that they are used in a way that respects due process and protects the rights of innocent individuals.

Some studies have reported that as many as 28% of facial recognition matches can be incorrect, with some estimates putting the figure as high as 50%.

Lawmakers are starting to take notice of incidents like this one. In recent months, several states have introduced bills aimed at regulating the use of AI-powered facial recognition technology by law enforcement agencies. Florida lawmakers are also exploring ways to improve accountability and oversight in police departments that rely on AI technology.

As the use of AI-powered facial recognition technology continues to grow, it is essential that law enforcement agencies prioritize due process and accountability over convenience and expediency. The case of Robert Dillon serves as a stark reminder of the dangers of relying on flawed technology and highlights the need for greater scrutiny and oversight of the role of AI in policing.

In recent years, there have been several high-profile cases of wrongful arrests and convictions attributed to flawed facial recognition technology. These incidents have sparked widespread debate about the ethics of facial recognition technology and its potential impact on civil liberties.

The incident involving Robert Dillon has raised questions about the role of AI-powered facial recognition in law enforcement agencies and the need for greater accountability and regulation. As policymakers continue to grapple with these issues, it is essential that they prioritize transparency, due process, and public safety.

Moreover, the use of AI-powered facial recognition raises broader concerns about surveillance and civil liberties. While proponents argue that the technology can help combat crime, critics point out that it is often used for purposes unrelated to law enforcement, such as social media monitoring and advertising tracking.

As we move forward, it is essential that policymakers take a nuanced approach to regulating AI-powered facial recognition technology, balancing its potential benefits with its risks and limitations. Only then can we ensure that this powerful tool is used in a way that respects the rights of all individuals, rather than serving as a means of error and abuse.

In 2020, a study by the National Institute of Justice reportedly found that 28% of facial recognition matches were incorrect.

The case of Robert Dillon serves as a stark reminder of the dangers of relying on flawed technology and highlights the need for greater accountability and regulation. As we move forward, it is essential that policymakers prioritize transparency, due process, and public safety.
